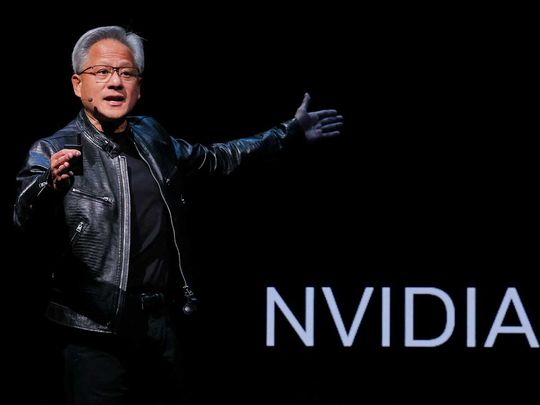

Nvidia has been the main beneficiary of a massive flood of AI spending, which has helped turn the company into the world’s most valuable chipmaker. Now it wants to broaden its customer base beyond the handful of cloud-computing giants that generate much of its sales. As part of that expansion, Chief Executive Officer Jensen Huang expects a wider range of companies and government agencies to embrace AI, from shipbuilders to drug developers. He returned to themes he set out a year ago at the same venue, including the idea that those without AI capabilities will be left behind.

“We are seeing computation inflation,” Huang said on Sunday. As the amount of data that needs to be processed grows exponentially, traditional computing methods cannot keep up, and only Nvidia’s style of accelerated computing can bring costs down, he said. He touted cost savings of 98% and a 97% reduction in energy use with Nvidia’s technology, calling the figures “CEO math, which is not accurate, but it is correct.”

Rubin AI platform will use HBM4

Huang said the upcoming Rubin AI platform will use HBM4, the next iteration of high-bandwidth memory. That essential component has become a bottleneck for AI accelerator production, and market leader SK Hynix Inc. is largely sold out through 2025. Huang did not otherwise offer detailed specifications for the Rubin products, which will succeed Blackwell.

Nvidia got its start selling gaming cards for desktop PCs, and that background is coming into play as computer makers push to add more AI functions to their machines.

Microsoft Corp. and its hardware partners are using Computex to show off new laptops with AI enhancements under the Copilot+ brand. Most of those devices are based on a new type of processor from Nvidia rival Qualcomm Inc. that lets them run longer on a single battery charge.

While those devices are good for simple AI functionality, adding an Nvidia graphics card will massively increase their performance and bring new features to popular software such as games, Nvidia said. PC makers including Asustek Computer Inc. are offering such machines, the company said.

To help software makers bring more new capabilities to the PC, Nvidia is offering tools and pretrained AI models. These will handle complex tasks such as deciding whether to crunch data on the machine itself or send it over the internet to a data center.

Nvidia is releasing a new design for server computers

Separately, Nvidia is releasing a new design for server computers built on its chips. Its MGX program helps companies such as Hewlett Packard Enterprise Co. and Dell Technologies Inc. get products for corporations and government agencies to market faster. Even rivals Advanced Micro Devices Inc. and Intel Corp. are taking advantage of the design, with servers that put their processors alongside Nvidia chips.

Earlier-announced products, such as Spectrum X for networking and Nvidia Inference Microservices (NIM), which Huang called “AI in a box,” are now generally available and being widely adopted, the company said. Nvidia is also going to offer free access to NIM. The microservices are a set of intermediate software and models that help companies roll out AI services more quickly without having to worry about the underlying technology. Companies that deploy them then pay Nvidia a usage fee.

Huang also promoted the use of digital twins in a virtual world that Nvidia calls the Omniverse. To demonstrate the scale possible, he showed a digital twin of planet Earth, called Earth-2, and how it can help with more sophisticated weather-pattern modeling and other complex tasks. He noted that Taiwan-based contract manufacturers such as Hon Hai Precision Industry Co., also known as Foxconn, are using the tools to plan and operate their factories more efficiently.