A new Google Search AI feature helped boost the company’s first-quarter revenue. Now it’s the laughing stock of the internet.

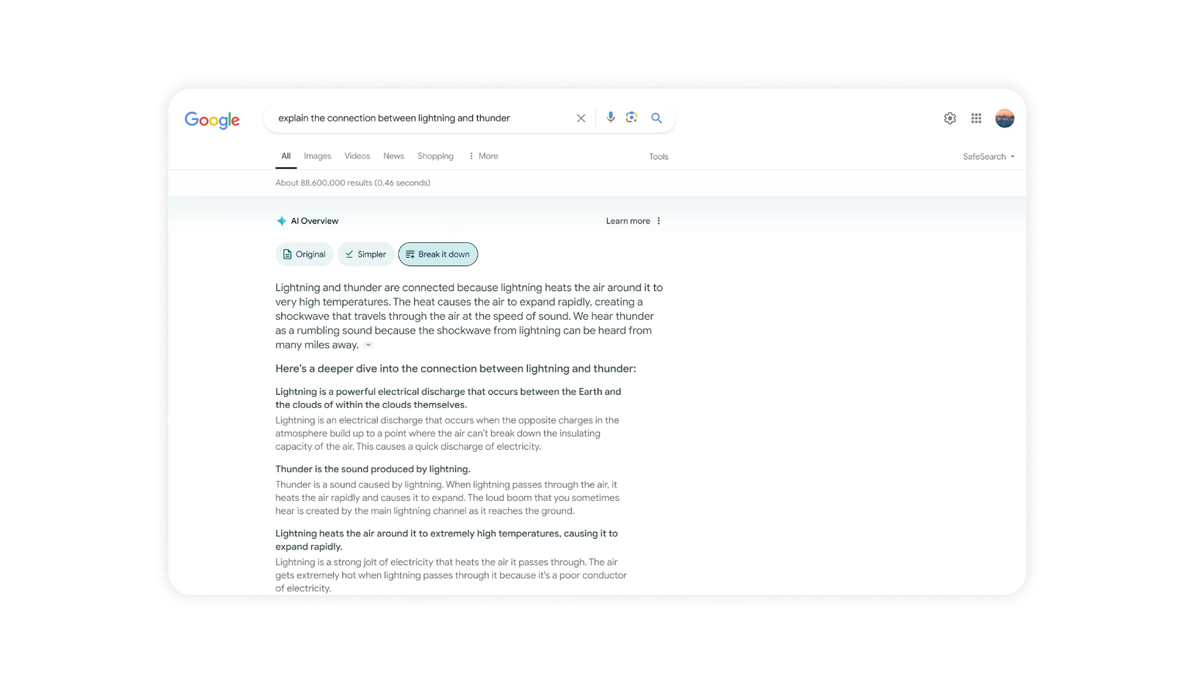

Google officially rolled out a generative artificial intelligence feature for its search engine, called AI Overviews, during its annual I/O developer conference last week. The tool gives users a summary of information from the web in response to their Search queries. Sometimes it takes silly online information too seriously; at other times it generates wildly bad, wrong, weird, even dangerous new responses on its own.

Redditors and X users have flooded the internet with examples of AI Overviews’ blunders. Some of the tool’s sillier results propose adding glue to pizza and eating one rock a day. Then there are more dangerous ones: AI Overviews suggested that a depressed user jump off the Golden Gate Bridge and said that someone bitten by a rattlesnake should “cut the wound or attempt to suck out the venom.”

Those responses, sourced from satirical news outlet The Onion and Reddit commenters, stated jokes as facts. In other cases, the tool ignores facts from the web altogether, making incorrect statements such as that there’s “no sovereign country in Africa whose name begins with the letter ‘K.’” AI Overviews is also getting things wrong about its very own maker, Google. It said Google AI models were trained on “child sex abuse material” and that Google still operates an AI research facility in China.

This is the second major AI-related embarrassment for Google. The company had to hit pause on its AI chatbot Gemini when it was criticized for producing historically inaccurate images. But Google’s in good company: chatbots from most companies have been cited for so-called hallucinations, making up wildly wrong information. An outage at OpenAI in February had ChatGPT spouting gibberish. Even when operating at full capacity, ChatGPT has falsely accused people of sexual harassment and generated citations of nonexistent court cases. And in March, Microsoft Copilot suggested self-harm to a user at great length.

AI’s potential for generating misinformation and disinformation has caused widespread concern, especially ahead of the 2024 U.S. presidential election. While state lawmakers have introduced hundreds of bills to tackle the problems associated with AI, no substantive federal legislation exists.