This week, Google unveiled AlphaChip, its reinforcement learning method for designing chip layouts. AlphaChip promises to substantially speed up chip floorplanning and to produce layouts that are better in terms of performance, power, and area. The method, now shared with the public, has been instrumental in designing Google’s Tensor Processing Units (TPUs) and has been adopted by other companies, including MediaTek.

Chip design layout, or floorplanning, has traditionally been the longest and most labor-intensive phase of chip development. In recent years, Synopsys has developed AI-assisted chip design tools that can accelerate development and optimize a chip’s floorplan. However, these tools are expensive. Google wants to make this AI-assisted approach to chip design at least somewhat more accessible.

Today, designing a floorplan for a complex chip, such as a GPU, takes about 24 months when done by humans. Floorplanning something less complex can still take several months and cost millions of dollars, because design teams are usually large. Google says that AlphaChip shortens this timeline and can create a chip layout in just a few hours. Moreover, its designs are said to be superior because they optimize power efficiency and performance. Google also showed a graph of the wire length reduction AlphaChip achieved across several generations of TPUs, including Trillium, compared with layouts produced by human designers.

AlphaChip uses a reinforcement learning model in which an agent takes actions in a predefined environment, observes the outcomes, and learns from these experiences to make better choices in the future. AlphaChip treats chip floorplanning as a kind of game in which it places one circuit component at a time on a blank grid. The system improves as it solves more layouts, using a graph neural network to understand the relationships between components.
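To make the "placement as a game" framing concrete, here is a minimal Python sketch of a single episode. It is not Google’s implementation: the function names are hypothetical, a simple greedy rule stands in for the learned policy and graph neural network, and half-perimeter wirelength (HPWL) is assumed as a rough stand-in for the wire length the article mentions. No learning happens in this sketch.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


def hpwl(placement: Dict[str, Cell], nets: List[List[str]]) -> int:
    """Half-perimeter wirelength, counting only components placed so far."""
    total = 0
    for net in nets:
        cells = [placement[c] for c in net if c in placement]
        if len(cells) < 2:
            continue  # a net with fewer than two placed pins has no length yet
        xs = [x for x, _ in cells]
        ys = [y for _, y in cells]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def play_episode(
    components: List[str], nets: List[List[str]], grid: int
) -> Tuple[Dict[str, Cell], int]:
    """Place components one at a time on a blank grid x grid board.

    ASSUMPTION: the greedy choice below is a placeholder for a trained
    policy; a learning agent would pick the cell and then be updated
    from the reward returned at the end of the episode.
    """
    free = [(x, y) for x in range(grid) for y in range(grid)]
    placement: Dict[str, Cell] = {}
    for comp in components:
        # Action: choose the free cell that keeps wirelength lowest so far.
        cell = min(free, key=lambda c: hpwl({**placement, comp: c}, nets))
        free.remove(cell)
        placement[comp] = cell
    reward = -hpwl(placement, nets)  # shorter wires give a higher reward
    return placement, reward


if __name__ == "__main__":
    # Hypothetical toy netlist: "a" connects to "b", and "b" connects to "c".
    layout, reward = play_episode(["a", "b", "c"], [["a", "b"], ["b", "c"]], grid=3)
    print(layout, reward)
```

In a real reinforcement learning setup, the reward from many such episodes would be used to update the policy, which is how the system improves as it solves more layouts.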

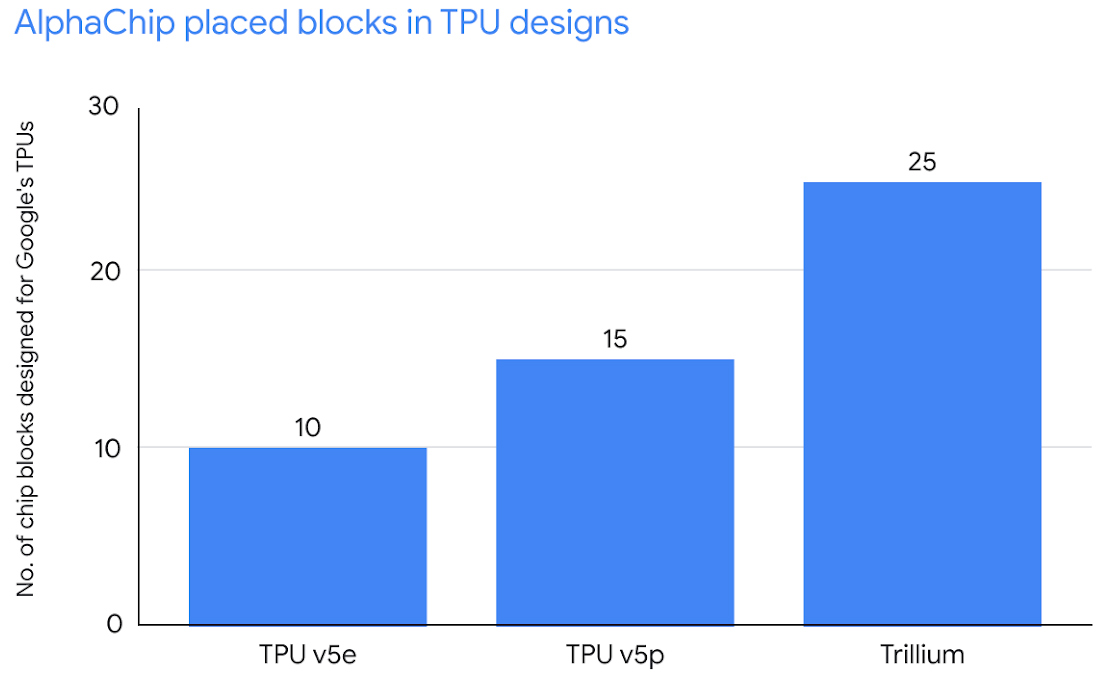

Since 2020, AlphaChip has been used to design Google’s own TPU AI accelerators, which drive many of Google’s large-scale AI models and cloud services. These processors run the Transformer-based models that power Google’s Gemini and Imagen. AlphaChip has improved the design of each successive generation of TPUs, including the latest sixth-generation Trillium chips, ensuring higher performance and faster development. Even so, both Google and MediaTek rely on AlphaChip for only a limited set of blocks, and human developers still do the bulk of the work.

By now, AlphaChip has been used to develop a variety of processors, including Google’s TPUs and MediaTek’s Dimensity 5G system-on-chips, which are widely used in smartphones. This suggests that AlphaChip can generalize across different types of processors. Google says it has been pre-trained on a wide range of chip blocks, which enables AlphaChip to generate increasingly efficient layouts as it practices on more designs. Human experts learn too, and many learn fast, but a machine learns orders of magnitude faster.

Extending usage of AI for chip development

Google says AlphaChip’s success has inspired a wave of new research into using AI for different stages of chip design. This includes extending AI techniques into areas like logic synthesis, macro selection, and timing optimization, which Synopsys and Cadence already offer, albeit at a high price. According to Google, researchers are also exploring how AlphaChip’s approach could be applied to further stages of chip development.

“AlphaChip has inspired an entirely new line of research on reinforcement learning for chip design, cutting across the design flow from logic synthesis to floorplanning, timing optimization and beyond,” a statement by Google reads.

Looking ahead, Google sees potential for AlphaChip to revolutionize the entire chip design lifecycle. From architecture design to layout to manufacturing, AI-driven optimization could lead to faster, smaller (i.e., cheaper), and more energy-efficient chips. For now, Google’s servers and MediaTek Dimensity 5G-based smartphones benefit from AlphaChip, but its applications may broaden to a far wider range of devices in the future.

Future versions of AlphaChip are already under development, so stay tuned for even more AI-driven chip designs.