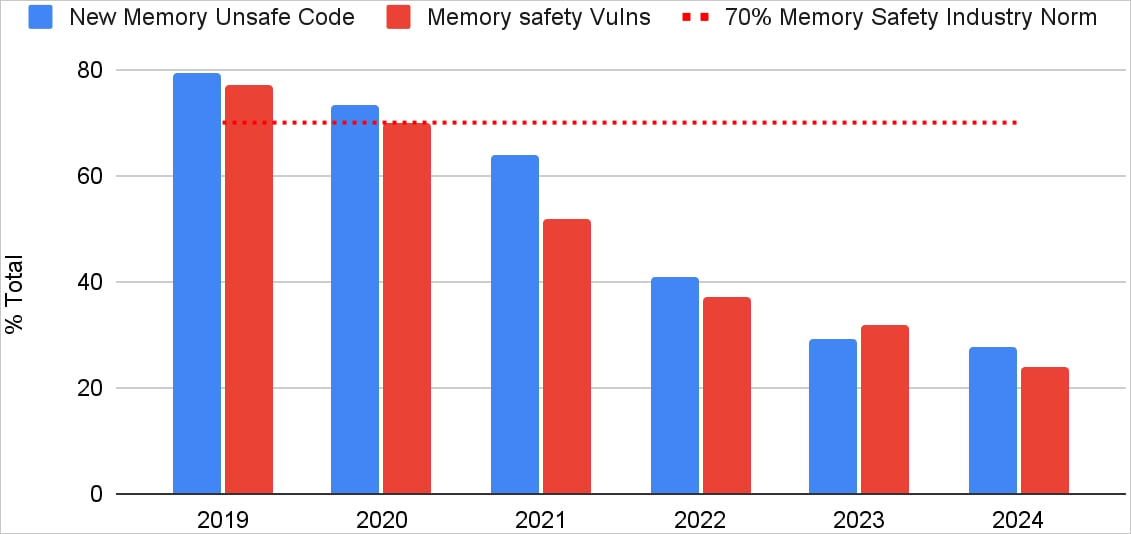

The percentage of Android vulnerabilities caused by memory safety issues has dropped from 76% in 2019 to only 24% in 2024, a relative decrease of over 68% (52 percentage points) in five years.
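The "over 68%" figure reads as a relative decrease rather than a drop in percentage points. A minimal sketch of that arithmetic, using only the two shares quoted above (the function name `relative_decrease` is illustrative and does not come from Google's report):

```python
def relative_decrease(before: float, after: float) -> float:
    """Return the drop from `before` to `after` as a percentage of `before`."""
    return (before - after) / before * 100


share_2019 = 76.0  # % of Android vulnerabilities caused by memory safety issues in 2019
share_2024 = 24.0  # the same share in 2024

point_drop = share_2019 - share_2024  # absolute drop, in percentage points
relative_drop = relative_decrease(share_2019, share_2024)  # drop relative to the 2019 share

print(f"{point_drop:.0f} percentage points, {relative_drop:.1f}% relative decrease")
```

Keeping the two measures apart matters here: the share fell by 52 percentage points, and that fall amounts to roughly two-thirds of the 2019 level.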

This is well below the 70% previously found in Chromium, making Android an excellent example of how a large project can gradually and methodically move into safer territory without breaking backward compatibility.

Google says it achieved this result by prioritizing memory-safe languages like Rust for new code, which minimizes the introduction of new flaws over time.

At the same time, the old code was maintained with minimal changes focused on important security fixes rather than performing extensive rewrites that would also undermine interoperability.

“Based on what we’ve learned, it’s become clear that we do not need to throw away or rewrite all our existing memory-unsafe code,” reads Google’s report.

“Instead, Android is focusing on making interoperability safe and convenient as a primary capability in our memory safety journey.”

Source: Google

This strategy lets older code mature and become safer over time, reducing the number of memory-related vulnerabilities in it regardless of the language it was written in.

These two pillars of Android's development strategy worked together to drive the dramatic decrease in memory flaws in the world’s most widely used mobile platform.

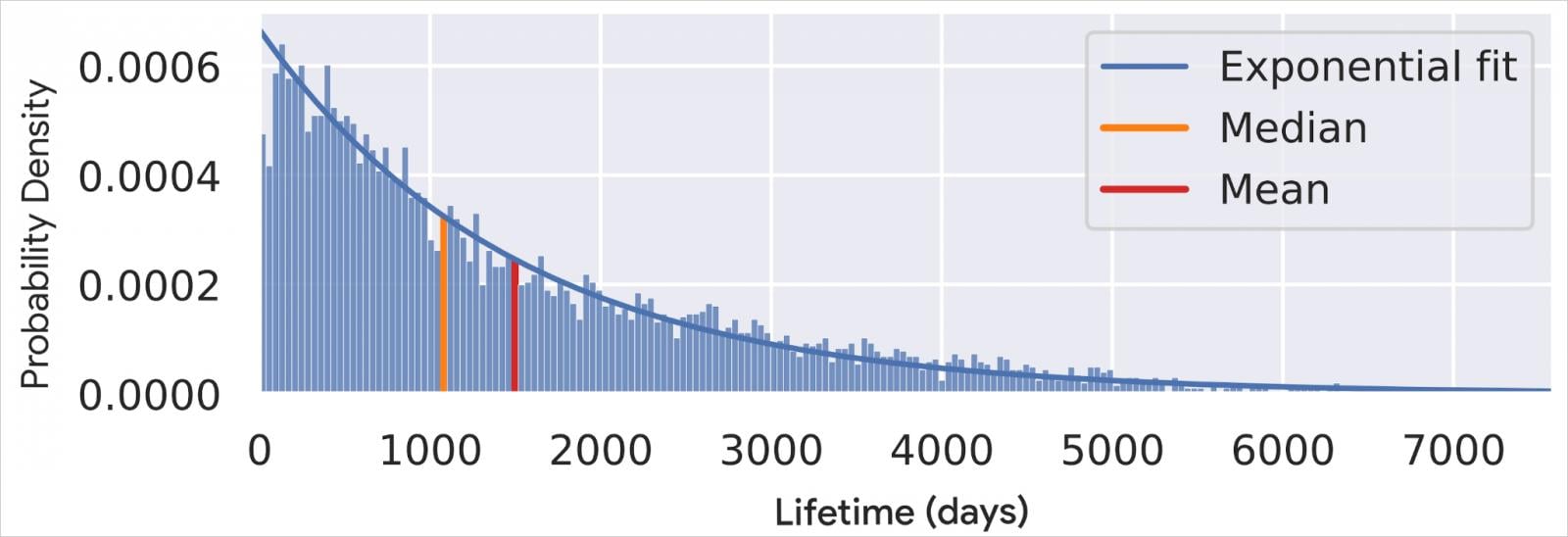

Google explains that, although leaving older code essentially unchanged may seem risky and new code is expected to be better tested and reviewed, the opposite is happening, counter-intuitive as that may seem.

This is because recent code changes introduce most flaws, so new code almost always contains security problems. At the same time, bugs in older code are ironed out over time unless developers make extensive changes to it.

Source: Google

Google says that the industry, including itself, has gone through four main stages in dealing with memory safety flaws, summarized as follows:

- Reactive patching: Initially, the focus was on fixing vulnerabilities after they were discovered. This approach resulted in ongoing costs, with frequent updates needed and users remaining vulnerable in the meantime.

- Proactive mitigations: The next step was implementing strategies to make exploits harder (e.g., stack canaries, control-flow integrity). However, these measures often came with performance trade-offs and led to a cat-and-mouse game with attackers.

- Proactive vulnerability discovery: This stage involved using tools like fuzzing and sanitizers to find vulnerabilities proactively. While helpful, this method only addressed symptoms, requiring constant attention and effort.

- High-assurance prevention (Safe Coding): The latest approach emphasizes preventing vulnerabilities at the source by using memory-safe languages like Rust. This “secure by design” method provides scalable and long-term assurance, breaking the cycle of reactive fixes and costly mitigations.

“Products across the industry have been significantly strengthened by these approaches, and we remain committed to responding to, mitigating, and proactively hunting for vulnerabilities,” explained Google.

“Having said that, it has become increasingly clear that those approaches are not only insufficient for reaching an acceptable level of risk in the memory-safety domain, but incur ongoing and increasing costs to developers, users, businesses, and products.

“As highlighted by numerous government agencies, including CISA, in their secure-by-design report, ‘only by incorporating secure by design practices will we break the vicious cycle of constantly creating and applying fixes.’”

Last June, the U.S. Cybersecurity and Infrastructure Security Agency (CISA) warned that 52% of the most widely used open-source projects use memory-unsafe languages.

Even projects written in memory-safe languages often depend on components written in memory-unsafe languages, so the security risk is complicated to address.

CISA recommended that software developers write new code in memory-safe languages such as Rust, Java, and Go, and transition existing projects, especially critical components, to those languages.