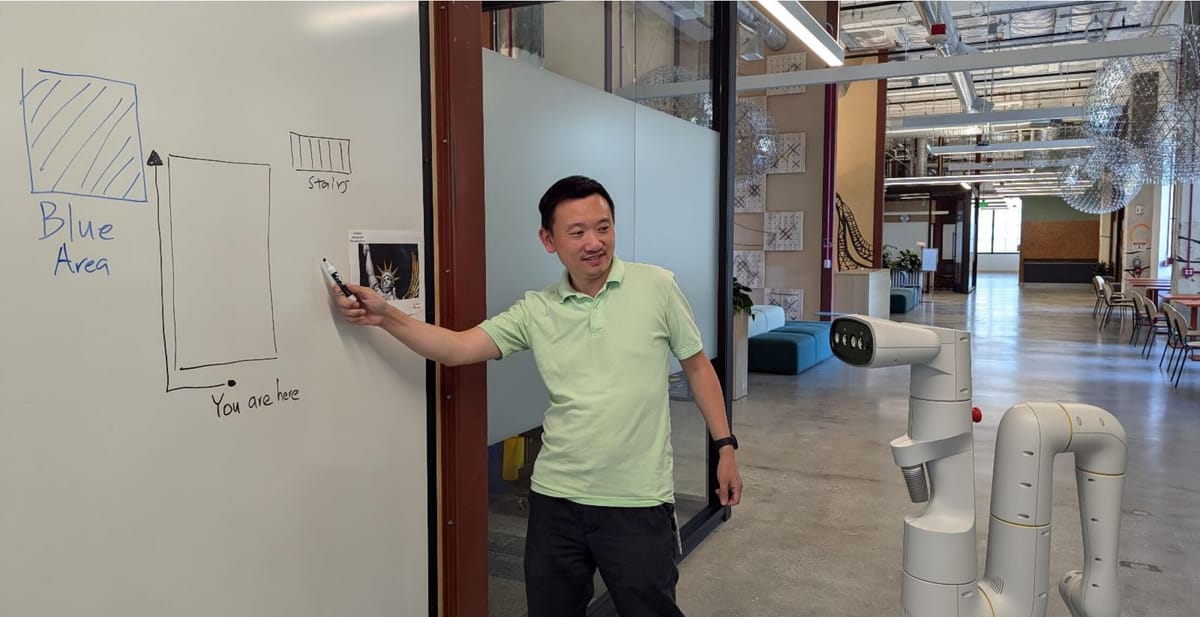

In a new research paper, Google’s DeepMind robotics team shows how it is training robots to navigate and complete tasks using Gemini 1.5 Pro’s long context window, a significant step forward for AI-assisted robots.

Gemini 1.5 Pro’s long context window lets the AI model process far more information in one go than its predecessors could. That capability lets a robot “remember” and understand its environment, which makes it more adaptable and changes how robots can learn about and interact with their surroundings. The DeepMind team is putting this to clever use: they’re having robots “watch” video tours of places, much as a person would.

Here’s their process:

- Researchers film a tour of a place, like an office or home.

- The robot, powered by Gemini 1.5 Pro, watches this video.

- The robot learns the layout, where things are, and key features of the space.

- When given a command later, the robot uses its “memory” of the video to navigate.

For example, if you show the robot a phone and ask, “Where can I charge this?” it can lead you to a power outlet it remembers from the video.
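To make the idea concrete, here is a toy sketch of the steps above. It is not DeepMind’s implementation: the `TourFrame` structure, the sample tour, and the `REQUEST_TO_OBJECT` lookup are all hypothetical, and the lookup is a crude stand-in for the reasoning Gemini 1.5 Pro does over the actual video. The sketch only assumes that the tour can be reduced to an ordered list of places with the objects seen in each, and that the robot can move between places that appeared back to back in the video.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class TourFrame:
    """One remembered moment from the video tour (hypothetical structure)."""
    location: str
    objects: set = field(default_factory=set)


# Hypothetical tour: frame order is the order in which the camera moved.
TOUR = [
    TourFrame("entrance", {"door", "coat rack"}),
    TourFrame("hallway", {"whiteboard"}),
    TourFrame("kitchen", {"fridge", "sink"}),
    TourFrame("hallway"),
    TourFrame("desk area", {"monitor", "power outlet"}),
]

# Stand-in for the model's reasoning: maps a request to the object it implies.
REQUEST_TO_OBJECT = {"where can i charge this?": "power outlet"}


def build_graph(tour):
    """Connect locations that appear in consecutive frames of the tour."""
    graph = {frame.location: set() for frame in tour}
    for prev, nxt in zip(tour, tour[1:]):
        if prev.location != nxt.location:
            graph[prev.location].add(nxt.location)
            graph[nxt.location].add(prev.location)
    return graph


def find_goal(tour, request):
    """Return the location of the first frame that shows the needed object."""
    target = REQUEST_TO_OBJECT.get(request.strip().lower())
    for frame in tour:
        if target in frame.objects:
            return frame.location
    return None  # unknown request, or the object never appeared in the tour


def plan_route(graph, start, goal):
    """Breadth-first search for the route with the fewest hops."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for neighbor in sorted(graph[path[-1]]):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None  # goal is unreachable from start
```

With this sample tour, `find_goal(TOUR, "Where can I charge this?")` returns `"desk area"`, and `plan_route(build_graph(TOUR), "entrance", "desk area")` returns `["entrance", "hallway", "desk area"]`. The real system has to work out the goal from raw video and an image of the phone, which is where the long context window comes in.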

The team tested these Gemini-powered robots in a 9,000-square-foot area. The robots nailed it, successfully following over 50 different instructions 90% of the time. That’s a big jump in how well robots can get around complex spaces. Potential applications range from assisting the elderly to improving workplace efficiency.

The robots might be able to do even more than just navigate. The DeepMind team has early evidence that these robots can plan out multi-step tasks.

For example, a user with empty soda cans on their desk asks if their favorite drink is in stock. The robot figures out it needs to:

- Go to the fridge

- Check for the specific drink

- Come back and report what it found

This shows a level of understanding and planning that goes beyond simple navigation.

Of course, there is still a lot of room for improvement. For example, the system takes 10 to 30 seconds to process each instruction, which is too slow for real-world use. Plus, it has only been tested in controlled environments, not the messy, unpredictable real world.

But the DeepMind team isn’t stopping. They’re working on making the system faster and able to handle more complex tasks. As this tech gets better, we might eventually have robots that understand and move through our world almost like humans do.