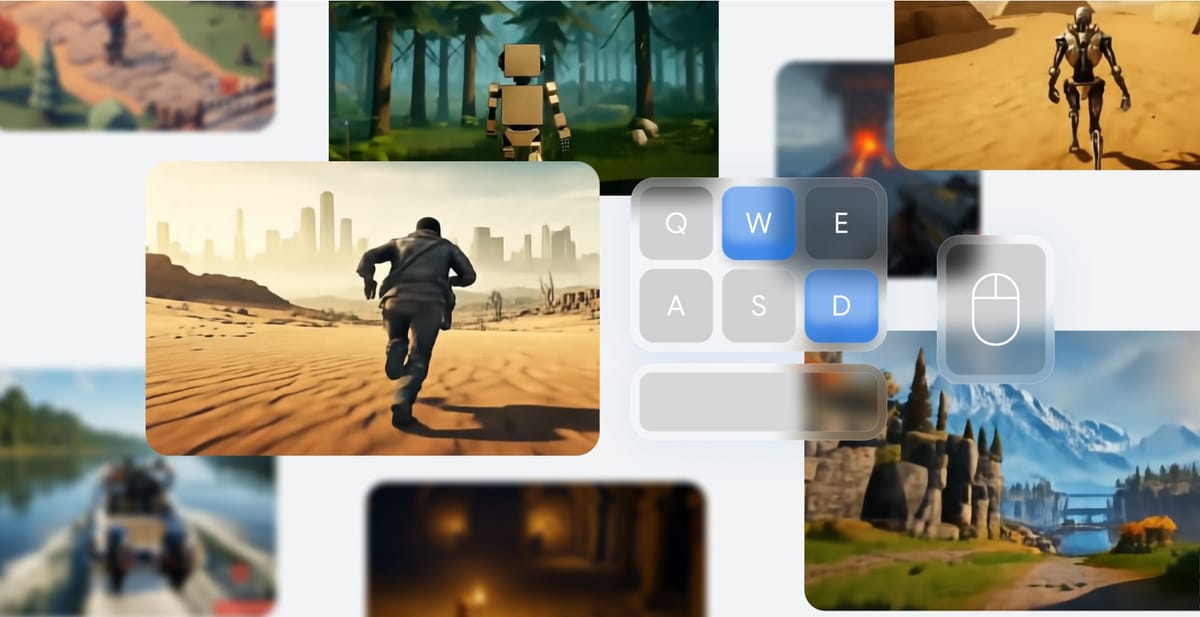

Google DeepMind has unveiled Genie 2, a foundation world model that turns a single image into a playable 3D environment.

Key points:

- Genie 2 creates interactive 3D worlds from single images, playable for up to a minute

- The model simulates physics, lighting, and NPC behavior

- DeepMind has successfully run its SIMA agent inside Genie 2’s generated worlds

- The technology could revolutionize AI training and rapid game prototyping

Driving the news: With Genie 2, Google DeepMind has entered an increasingly competitive race to build AI world models. World models are seen as crucial for training robots and building more capable AI systems. Earlier this week, we also got a first look at the work of Fei-Fei Li’s company, World Labs, which is building a similar system. In October, Israeli startup Decart showcased its own world model, Oasis.


World Labs’ system creates persistent 3D environments that maintain consistency as users explore them from different angles.

Unlike Decart’s Oasis, which struggles with low resolution and with remembering level layouts, Genie 2 maintains scene consistency and accurately remembers off-screen elements. It matches World Labs’ system on spatial memory while adding more sophisticated interaction features.

Genie 2 creates diverse, rich 3D environments that are playable for up to a minute. Users can interact with non-player characters (NPCs) and objects, while the model simulates physics and environmental effects such as gravity and collisions.

The details:

- Emergent Capabilities: Genie 2 goes beyond visual simulation, producing complex character animations, realistic lighting and reflections, and even physical forces. Whether the scene is an ancient ruin or a futuristic loft, Genie 2 adds a new level of realism.

- Agent Training: Google DeepMind has tested its SIMA agent inside Genie 2, where SIMA follows natural-language instructions in the generated environments. SIMA can explore, interact with objects, and perform tasks such as opening doors or navigating terrain, while Genie 2 generates the world around it in response to SIMA’s actions.


The research is building towards more general AI systems and agents that can understand and safely carry out a wide range of tasks in a way that is helpful to people online and in the real world.

Zoom out: Training AI agents has been bottlenecked by a shortage of rich, diverse environments. Google DeepMind sees Genie 2 as a foundational tool for overcoming that bottleneck, supplying a wide variety of scenarios in which to train more general AI agents.

Between the lines: Genie 2 is an autoregressive latent diffusion model trained on a large-scale video dataset. It generates environments from simple inputs, such as an image of a scene from ancient Egypt or a sci-fi landscape. This kind of rapid prototyping could transform how designers, researchers, and developers create and interact with virtual worlds.

Why it matters for AI research: One of the hardest challenges for generated worlds is remembering the environment over time. Genie 2 can remember elements and keep them in place even after they leave the user’s view, addressing a key consistency problem in generated 3D spaces.

What’s next: Don’t get too excited. Genie 2 won’t be creating AAA video games just yet. Google has positioned it as a research and prototyping tool: it enables rapid creation of rich environments, making it easier to evaluate AI agents in situations they haven’t been trained for.

- This launch aligns with Google’s broader push into generative AI and immersive technologies, aiming to blur the lines between digital and physical worlds.

- Future advances in Genie could lead to AI agents that handle real-world challenges far more capably.

The bottom line: Google’s Genie 2 is a major step toward virtual worlds that are not just immersive but interactive and useful, both for training AI and for prototyping creative experiences. It’s an exciting step toward bringing imagined worlds to life, and one that could reshape how we interact with both AI and the virtual spaces it inhabits.