Ratcheting up the competition between its Vertex AI platform and the GPT platform built by OpenAI and deployed by Microsoft, Google Cloud says its new Gemini 1.5 Flash model beats the comparable GPT model, GPT-3.5 Turbo, by 40% on speed, 4X on price, and 60X on context-window capacity.

While I realize that, among businesspeople, these types of relatively deep-tech comparisons can trigger episodes of IEGS (Instant Eye-Glazing Syndrome), I’m showcasing them here because business leaders driving AI strategies need to know about these developments for a few vital reasons:

- the breathtaking pace of AI innovation underscores the need for business leaders to become actively and urgently engaged with AI initiatives;

- the GenAI portfolios of leading tech vendors are expanding at an equally dramatic pace, as those technical innovations are rapidly infused into business-ready models, tools, and solutions;

- the early-adopter deployments by companies in a wide range of industries, including Uber Eats, Ipsos, Jasper, Shutterstock, and Quora, are being made possible by some of these new innovations and can provide inspiration for leaders in other industries; and

- while the launch of OpenAI’s ChatGPT about 19 months ago, along with Microsoft’s deployment of it, gave OpenAI first-mover status, Google Cloud has made enormous progress in assembling and constantly updating what is likely the industry’s most advanced set of GenAI capabilities.

In fact, the recent launch of Gemini 1.5 Flash is part of a broader new-product blitz from Google Cloud designed to get customers to see Vertex AI as “the most enterprise-ready” GenAI platform.

In December, Google Cloud launched its multimodal Gemini model in three sizes: Ultra, Pro, and Nano. A few months later it rolled out Gemini 1.5 Pro, with an emphasis on a longer context window that enables the model to handle more-detailed instructions. Now Gemini 1.5 Flash is the latest addition to the portfolio.

The new model is “optimized for high-volume, high-frequency tasks at scale, is more cost-efficient to serve, and features our breakthrough long context window,” Google Cloud said. For potential customers who’ve experienced sticker shock from the original Gemini models, Gemini 1.5 Flash is designed to offer solid performance at a more manageable price point. Google Cloud believes Gemini 1.5 Flash is ideally suited for applications such as retail chat agents, document processing, and research agents that can scan and reason over vast repositories.

According to Google Cloud, Gemini 1.5 Flash dramatically outperforms competitors in the key areas noted at the top of this article. From the Google Cloud press release announcing the new model, here is some additional context on those GPT comparisons cited above:

“Most important of all, Gemini 1.5 Flash’s strong capabilities, low latency, and cost efficiency has quickly become a favorite with our customers, offering many compelling advantages over comparable models like GPT 3.5 Turbo:

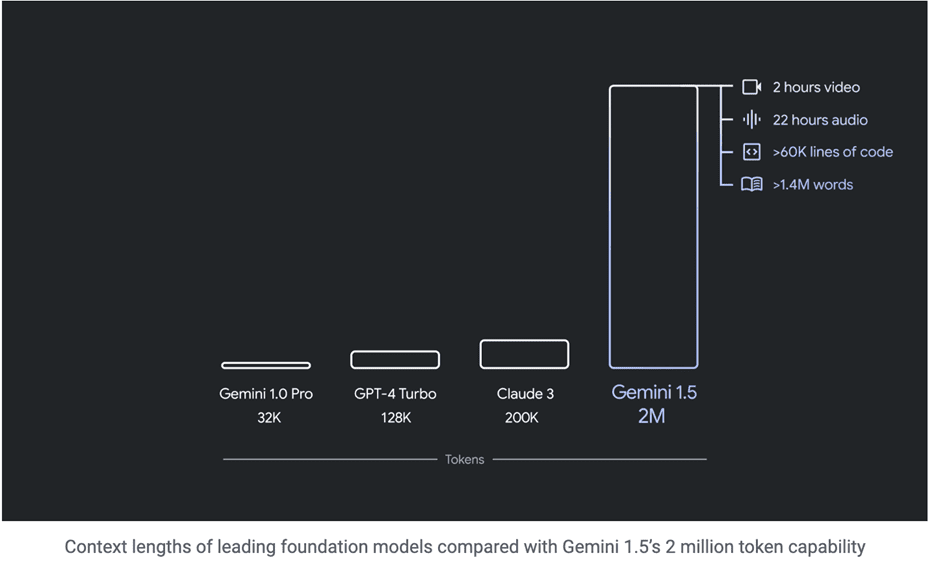

- 1 million-token context window, which is approximately 60x bigger than the context window provided by GPT-3.5 Turbo

- On average, 40% faster than GPT-3.5 Turbo when given input of 10,000 characters

- Up to 4X lower input price than GPT-3.5 Turbo, with context caching enabled for inputs larger than 32,000 characters”

Google Cloud offered some customer perspectives on their experiences with Gemini 1.5 Flash.

Uber Eats used the new model to build the Uber Eats AI assistant, which lets Uber Eats customers use natural language to “learn, ideate, and discover and shop for things in our catalog seamlessly.” Uber Eats said the new model accelerates response times by 50%.

Ipsos: the research firm’s global head of Generative AI, JC Escalante, said in the press release, “Gemini 1.5 Flash makes it easier for us to continue our scale-out phase of applying generative AI in high-volume tasks without the trade-offs on quality of the output or context window, even for multimodal use cases. Gemini 1.5 Flash creates opportunities to better manage ROI.”

Final Thought

No doubt OpenAI and Microsoft are hard at work on the next iteration of their own models, which may well surpass what Google Cloud has just delivered. Over time, the resulting back-and-forth competition among those three companies and others will generate huge value for customers.

And once again, the biggest winners in the Cloud Wars will be the customers.
