Google DeepMind has introduced the first AI-powered robotic table tennis player capable of competing at an amateur human level.

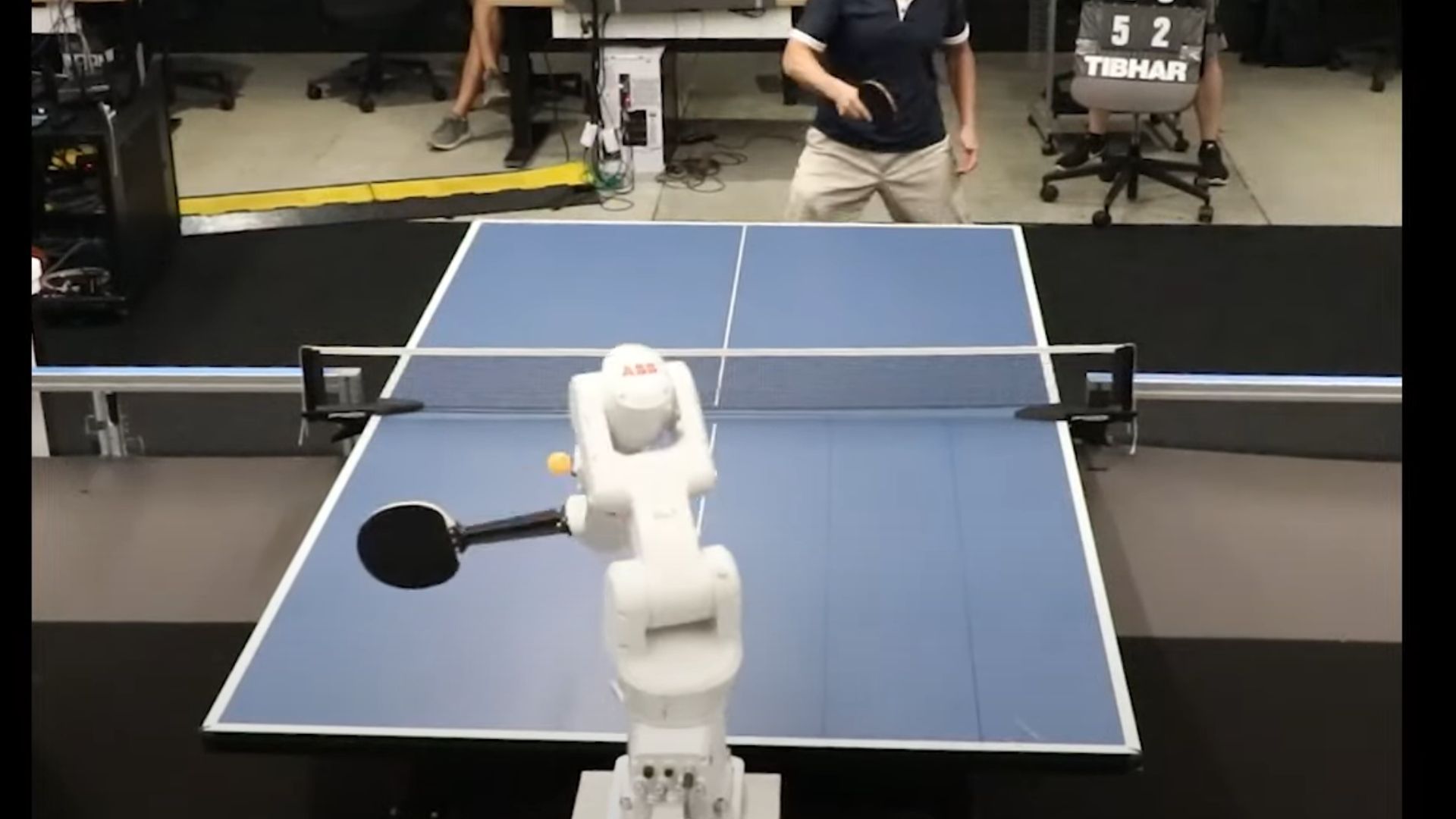

The system features an industrial robot arm, the ABB IRB 1100, integrated with custom AI software from DeepMind.

The robot was tested in 29 matches against human players it had not faced before, spanning a range of skill levels. It won 45 percent of those matches overall: every match against beginners and 55 percent against intermediate players, while losing every match against advanced players. That record places it at a solid amateur level.

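Achieving human-level speed and performance on real-world tasks is a long-standing goal of robotics research. “This work takes a step towards that goal and presents the first learned robot agent that reaches amateur human-level performance in competitive table tennis,” the researchers wrote in their study.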

Matching human skill

Despite recent advancements, achieving human-level accuracy, speed, and adaptability in robotics remains challenging.

Unlike purely strategic games such as Go or chess, table tennis demands both sophisticated low-level physical skills and strategic play. A strategically suboptimal shot that the player can execute confidently is often a better choice than a theoretically ideal one.

Researchers believe this makes table tennis an ideal testbed for developing robotic capabilities such as rapid movement, split-second decision-making, and system designs that can compete directly against humans.

Playing a full competitive match against human opponents is a demanding test of a robot's physical and strategic abilities, and no prior research had managed it.

Adaptive AI in table tennis

The team’s approach produced a robot that can play competitively at an amateur human level and that people enjoy playing against.

The researchers credit this to a modular policy architecture, techniques for transferring skills learned in simulation to the real world, and real-time adaptation to unseen opponents. User studies then tested the robot in real-world matches.

The robot’s architecture pairs a library of low-level skills with a high-level controller that decides which skill to use in each situation. Each low-level skill focuses on a single table tennis action, such as backhand aiming or forehand topspin.

According to the researchers, the system also gathers information about the strengths and weaknesses of each skill, which helps the high-level controller choose the best shot given the opponent’s capabilities and the match statistics.
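The article does not say exactly how the high-level controller turns those statistics into a decision. As a minimal sketch, assume each skill keeps a running success count against the current opponent and the controller picks skills with a standard multi-armed bandit rule (UCB1), which favors skills that have worked while still occasionally trying the others. The skill names, `HighLevelController`, the `exploration` weight, and the simulated opponent are all hypothetical; DeepMind's actual controller may work quite differently.

```python
import math
import random
from dataclasses import dataclass, field


@dataclass
class SkillStats:
    attempts: int = 0
    successes: int = 0  # hypothetical: a "success" means the robot won the point


@dataclass
class HighLevelController:
    skills: list
    exploration: float = 1.0  # hypothetical UCB1 exploration weight
    stats: dict = field(default_factory=dict, init=False)

    def __post_init__(self):
        # One statistics record per low-level skill in the library.
        self.stats = {name: SkillStats() for name in self.skills}

    def choose(self) -> str:
        # Try every skill once before relying on the statistics.
        for name, s in self.stats.items():
            if s.attempts == 0:
                return name
        total = sum(s.attempts for s in self.stats.values())

        def ucb(name: str) -> float:
            s = self.stats[name]
            mean = s.successes / s.attempts
            # Success rate plus a bonus that shrinks as a skill is tried more often.
            return mean + self.exploration * math.sqrt(math.log(total) / s.attempts)

        return max(self.skills, key=ucb)

    def update(self, name: str, success: bool) -> None:
        s = self.stats[name]
        s.attempts += 1
        s.successes += int(success)


def simulate(rounds: int = 500, seed: int = 0) -> dict:
    # Hypothetical opponent who handles the robot's backhand well.
    win_prob = {"forehand_topspin": 0.8, "backhand_aim": 0.3}
    rng = random.Random(seed)
    hlc = HighLevelController(list(win_prob))
    for _ in range(rounds):
        skill = hlc.choose()
        hlc.update(skill, rng.random() < win_prob[skill])
    # How often the controller picked each skill.
    return {name: s.attempts for name, s in hlc.stats.items()}
```

Calling `simulate()` should show the controller drifting toward `forehand_topspin` against this made-up opponent. A bandit rule is only one simple way to "learn strengths and weaknesses" online; it is used here because it is compact and well understood, not because the study confirms it.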

The robot is first trained in simulation with reinforcement learning, then improves over repeated cycles of real-world play. This hybrid approach lets it refine its skills and adapt to more challenging gameplay.
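The article does not describe how real-world play feeds back into training. One plausible reading, offered here only as an assumption, is that each cycle records the balls the robot actually faced and adds them to the set of situations it practices in simulation. The toy below illustrates that loop: the "policy" is a stand-in that remembers practiced incoming ball speeds rather than a real reinforcement learning agent, and `TOLERANCE`, the speed ranges, and all function names are hypothetical.

```python
import random

TOLERANCE = 0.5  # m/s; hypothetical: a practiced speed "covers" nearby speeds


def train_in_sim(task_speeds):
    """Stand-in for RL training in simulation: the 'policy' is simply
    the set of incoming ball speeds it has practiced."""
    return sorted(set(task_speeds))


def can_return(policy, speed):
    return any(abs(speed - s) <= TOLERANCE for s in policy)


def play_real_rallies(policy, rng, n=200):
    """Stand-in for real-world play: human shots come from a range
    the initial simulator only partly covers."""
    speeds = [rng.uniform(4.0, 12.0) for _ in range(n)]
    returned = sum(can_return(policy, s) for s in speeds)
    return speeds, returned / n


def train_cycles(num_cycles=3, seed=0):
    rng = random.Random(seed)
    task_speeds = [4.0, 5.0, 6.0, 7.0]  # initial simulated tasks
    history = []
    for _ in range(num_cycles):
        policy = train_in_sim(task_speeds)
        observed, return_rate = play_real_rallies(policy, rng)
        history.append(return_rate)
        # Feed the real-world ball data into the next round of sim training.
        task_speeds.extend(observed)
    return history
```

In this toy, the first cycle returns well under half of the real shots because the simulator never showed the policy fast balls; after one round of real-world data is folded back in, the return rate approaches 1.0. A real system would face noisier perception, spin, and far richer ball states.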

“Truly awesome to watch the robot play players of all levels and styles. Going in our aim was to have the robot be at an intermediate level. Amazingly it did just that, all the hard work paid off,” said Barney J. Reed, a professional table tennis coach who has been working with DeepMind, in a statement.

To assess the agent’s proficiency, the team held competitive matches against 29 table tennis players whose skill levels (beginner, intermediate, advanced, and advanced+) were rated by a professional table tennis instructor.

Because the robot is physically unable to serve the ball, each match consisted of three games played under modified table tennis rules.

Across all opponents, the robot won 45 percent of its matches and 46 percent of individual games. Broken down by skill level, it won every match against beginners, 55 percent of matches against intermediate players, and none against advanced or advanced+ players.

According to the researchers, the results strongly indicate that during rallies the agent played at the level of an intermediate human player.

Additionally, study participants rated the robot highly for “fun” and “engaging,” regardless of their skill level or match outcome, and most wanted to play again.

Given five minutes of free play, participants spent an average of 4 minutes 6 seconds (about 82 percent of the available time) playing with the robot. Even though more experienced players exploited some of the robot’s flaws, they still had fun and saw potential for it as a dynamic practice partner.

Interviews revealed that the robot struggled with underspin, and the data backed this up: its performance dropped significantly as the amount of spin increased.

The researchers attribute this weakness to the difficulty of handling low balls and identifying spin in real time, and say it gives future training work concrete targets.

The team’s research is detailed in a preprint posted on arXiv.

ABOUT THE EDITOR

Jijo Malayil

Jijo is an automotive and business journalist based in India. Armed with a BA in History (Honors) from St. Stephen’s College, Delhi University, and a PG diploma in Journalism from the Indian Institute of Mass Communication, Delhi, he has worked for news agencies, national newspapers, and automotive magazines. In his spare time, he likes to go off-roading, engage in political discourse, travel, and teach languages.