Key Takeaways

- Human involvement, including reviewing and editing AI-generated content, is crucial for getting the best results from AI.

- Integrated AI tools like Gemini in Google apps are powerful when paired with effective prompt engineering.

- Fact-checking AI outputs is essential for accuracy, and AI is best suited for generative tasks that leave room for human improvement.

With AI pushing big changes in the workforce, Google has released an AI certificate on Coursera to teach regular folks about new AI tools.

I’ve used a few AI tools here and there, but I wanted to understand what Google’s AI course actually teaches. So, here’s what I learned after I successfully completed the Introduction to Google AI Essentials course.

The “Human in the Loop” Approach Gets Better Results

The biggest takeaway from the Google AI Essentials course is simple: AI requires human involvement. That’s where the “human in the loop” approach, which encourages manual review and revision of AI-generated content, becomes relevant.

After using AI, you should always ask where human involvement might be necessary for the best results. If you're writing a document that must be accurate, for example, you need to fact-check any research the AI produces to avoid including hallucinations (plausible-sounding but false information).

Remember: AI isn’t meant to replace your work. Instead, you should use it to augment and improve your work.

Integrated AI Is Most Powerful When You Use It Correctly

Another important lesson I took from Google's AI Essentials course is how to apply AI correctly. Throughout the course, Google has students use AI to draft a work email, create PowerPoint presentations and documents, and even examine the ethics of AI tools. One of its most interesting labs involves using Gemini.

Gemini is a powerful integrated AI tool that you can use in the sidebar or directly within Google Workspace apps like Gmail, Docs, Sheets, and Slides. You can use it to brainstorm, draft, and revise the content you produce in those apps. To use it effectively, Google recommends:

- Developing effective prompt templates

- Asking Gemini questions when you need to brainstorm

- Using the Refine and Shorten prompts to improve Gemini output

- And finally, keeping AI on hand by enabling the Gemini sidebar

As with any other generative AI tool, you need to write accurate and effective prompts to get the best results from Gemini. Because Gemini is built into Google Workspace apps, though, it's well suited to the tasks you do in them, which makes it a smart work companion.

The Right Strategy Is Essential for Prompt Engineering

Learning about prompts (the instructions you give an AI to help it generate the output you want) is essential. Prompts are also difficult to write well; it's like learning a whole new skill. While improvements in AI models (such as the shift from GPT-3.5 to GPT-4) have made AI-generated content better than ever, you still won't get useful output without good prompts. Some things to keep in mind when writing prompts include:

- AI results get better when you provide more context

- LLMs can't provide useful information about recent events if their training data isn't up to date

- You should provide data or examples when possible

- And finally, you should tell the AI what you want it to do rather than what you don't want it to do

In fairness, I was already familiar with these prompting techniques, but they're certainly useful for anyone just getting to grips with AI.

Fact-Checking AI Is Simpler Than It Seems

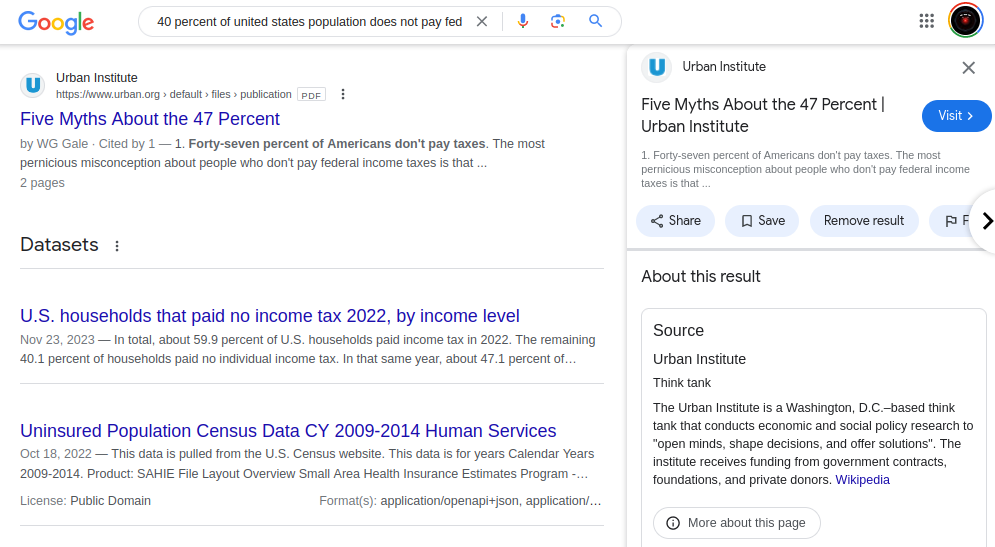

To understand how AI arrives at the right answer (and how it can hallucinate wrong ones), it helps to know how generative AI works. AI tools like Gemini and ChatGPT are built on large language models (LLMs), which produce outputs based on predicted patterns in human language.

In other words, these tools don’t understand what they’re generating, but they do use statistics to produce original content that is usually accurate and relevant to the given prompt. Unfortunately, bias, poor-quality training data, a lack of relevant data, prompt phrasing, and other factors can lead LLMs to produce false answers.

Generative AI usually arrives at the correct answer, but not always. The course really hammered home that the best way to produce accurate AI-generated content is to fact-check the information the AI produces. Rather than assuming that AI has expertise, you should treat its output as an aggregate of both good and bad content that requires revision and refinement.

This ties back to the "human in the loop" principle. The AI is only as useful as the inputs I give it, and because of limitations in its training and the underlying technology, I should also check its outputs to make sure they're accurate.

AI Isn't Required for Every Job

Now that they can handle tasks like summarizing video meetings, AI tools are more useful than ever. But they aren't right for every job, and the course recommends playing to your own unique strengths. Through the course, I found that AI was ideal for tasks like:

- Writing first drafts of emails and other documents

- Generating supplementary material, like flashcards or summaries

- Researching basic topics (ones likely covered by the data the AI was trained on)

During the course, I also discovered the weak points of many AI tools. They can't verify the accuracy of information, and they sometimes hallucinate. AI tools are excellent for drafting and revising, but they can't replace your unique voice, and their knowledge is limited to their training data.

If your task meets some or all of the following criteria, it’s usually better to avoid handing it off to AI:

- Your task requires recent or highly accurate information

- Your task requires in-depth research (such as finding new citations for a research paper)

- Your task is socially or morally complex or requires careful judgment

- Your task is unstructured and unpredictable

It's not an exact science, but I find that once you start using AI tools, you quickly get better at figuring out what they handle best.

Who Should Take Google’s AI Essentials Course?

The Google AI Essentials course provides a much-needed reminder to incorporate AI into your career thoughtfully if you want to avoid its drawbacks. Some questions to ask when deciding whether AI is right for a task include:

- Is your problem “generative?” In other words, do you need to create text, audio, images, or other content?

- Could you critique and improve the AI-generated results? For example, do you have a specific image in mind when attempting to generate an advertisement?

- Will you be able to review the AI-generated content? Can you verify its accuracy and completeness?

Beyond these points, I found that the Google AI Essentials course contains a trove of learning material perfect for those unfamiliar with AI. However, it isn't ideal for tech-savvy learners or those who already understand AI, who may prefer more in-depth alternatives like IBM's AI courses.

Unfortunately, the course costs $49, though it is included with Coursera Plus subscriptions. If you decide to dive deeper into AI with Google, however, consider taking a few more classes during your month of Coursera; there's a practically limitless variety of resources just waiting to help you apply AI to your career.