Last Tuesday, I returned from a blissfully computerless vacation abroad and logged back into work, only to find an uninvited visitor in my inbox: Gemini, Google’s A.I. assistant, had been deployed to my workspace without any warning. When I logged into Gmail for the first time in two weeks, a side panel popped out, introducing itself like the ghost of Clippy past. “Hi Shasha, how can I help you today?” it asked, along with offers of a few options like summarizing conversations, drafting an email, and showing me unread emails, the last of which would have been immediately possible had that pop-up not been in my way to begin with.

A.I. is controversial, and for good reason. Generative tools such as the large language model ChatGPT, or image generators like Midjourney, simultaneously threaten livelihoods and spread harmful misinformation. That’s not to mention the enormous environmental impact these resource-draining systems have. There is no doubt A.I. is here to stay, but the question is in what capacity and for whose benefit. And while there is no blanket answer, ethically speaking, it should always involve personal consent, especially when that consent is being presumed by a billion-dollar company.

So I headed to my user settings to turn Gemini off. But if you also use Google for work and have tried to do the same, you’ll have also noticed it’s not possible. Now, this is where most people’s struggle would end, at a loss for choice, and with maybe an annoyed email to their IT guy.

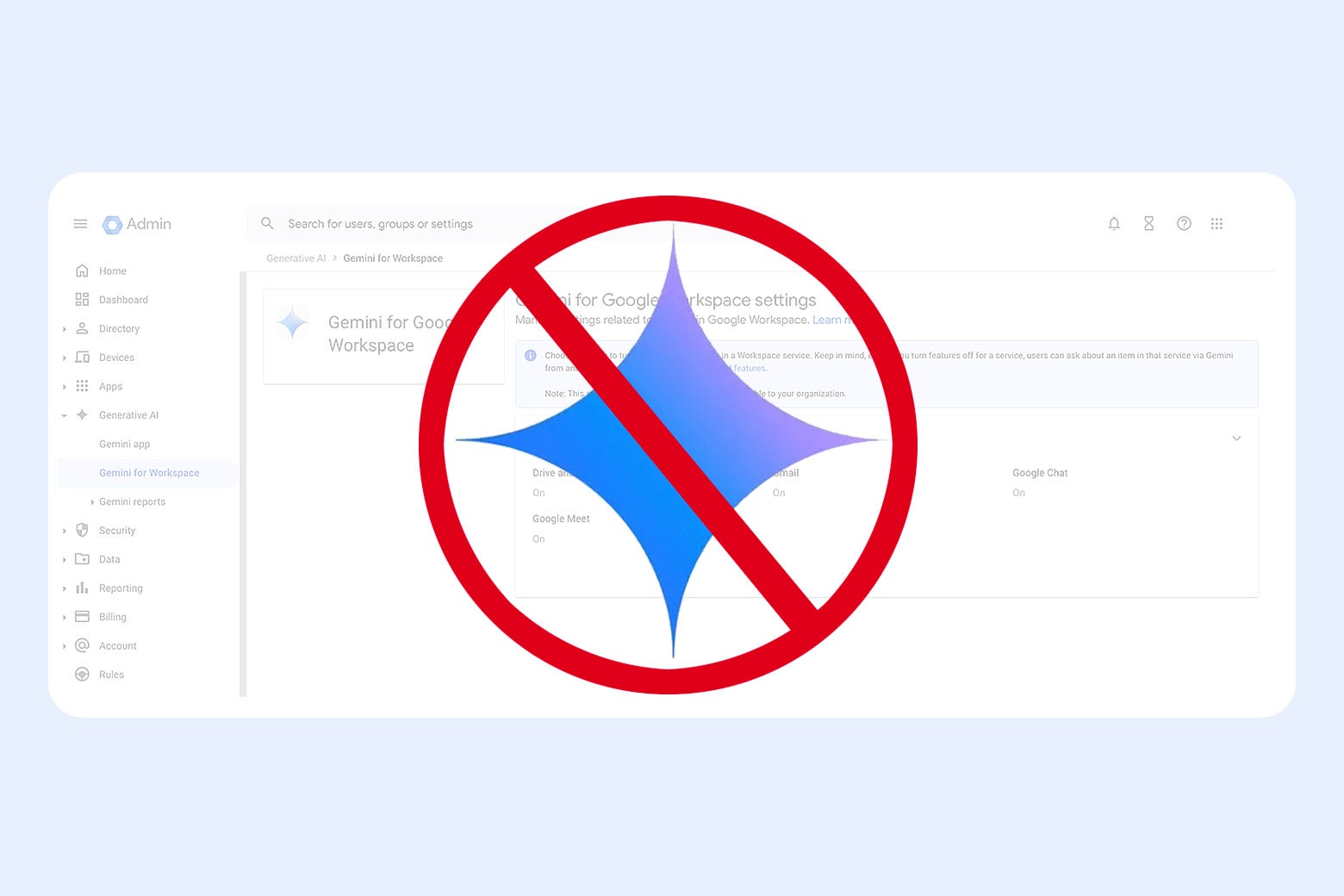

But I happen to be Slate’s annoyed IT guy—so I logged into my admin panel, located the brand-new “Generative AI” tab, and navigated to the object of my concern: “Gemini for Google Workspace” … only to find there were no settings to manage whatsoever, nor any option to turn it off.

This is particularly baffling, given that most—if not all—of Workspace’s features are customizable through the admin panel. That’s literally what we pay Google for.

For example, if I want to manage Drive external/internal viewing settings for certain users, I can do that. If I want to limit a user’s sharing abilities in Calendar, I can do that. If, for whatever reason, I want to turn those features off entirely despite being major components of a user’s workflow, I can do that. Why would Gemini be any different?

Second-guessing myself, I turned off the Gemini App instead and hoped for the desired changes to take effect (which Google warns you can take some time). But a few days passed with Gemini still asking me if I needed help finishing my sentences in Gmail, so like any other irritated and long-standing denizen of the internet, I headed over to Reddit to see what other sysadmins had to say about this. What I found unsettled me.

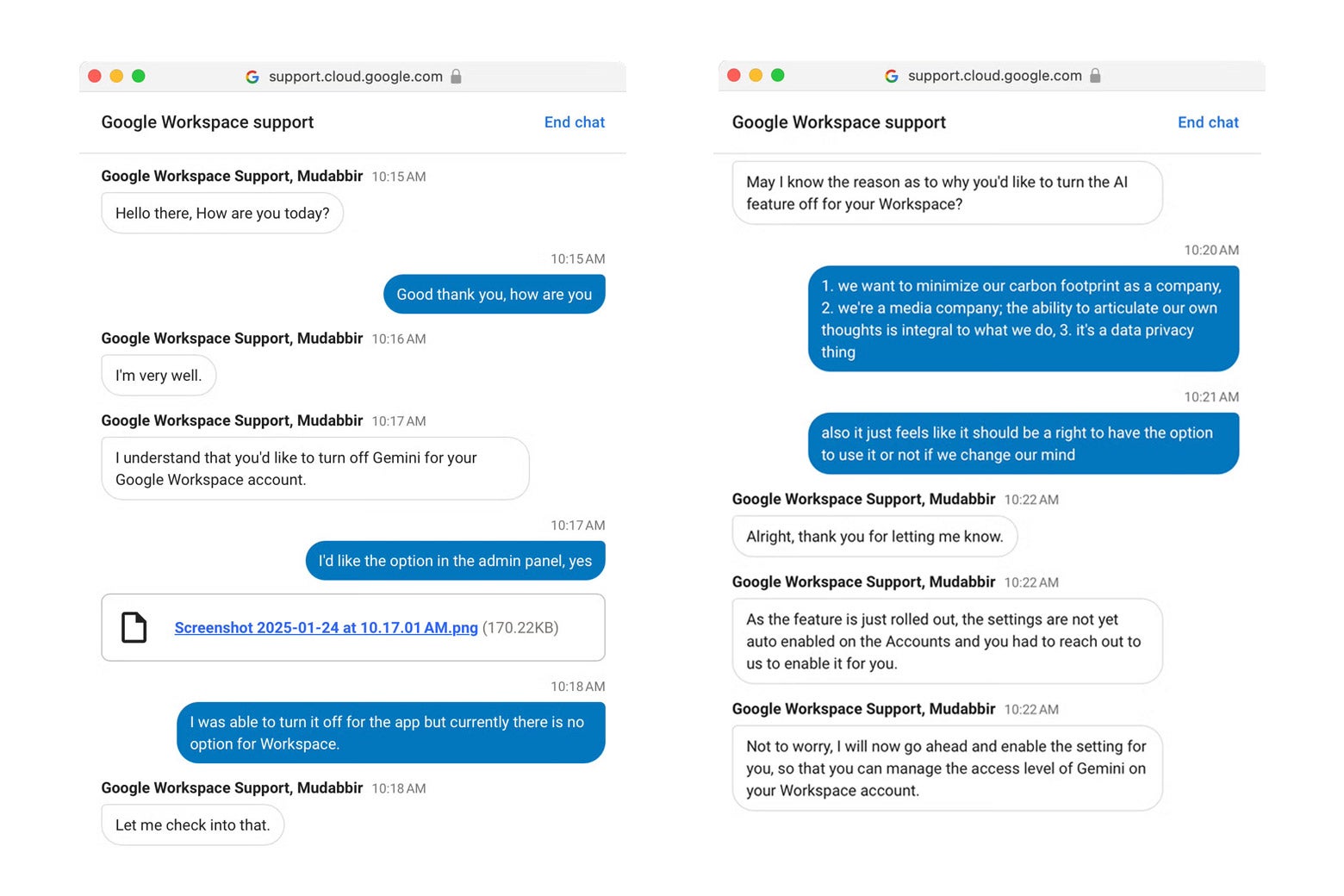

In one post, Reddit user heretocomplain123 described how they had also encountered Gemini for Google Workspace, but couldn’t immediately turn it off. Eventually, they were able to find the solution after contacting a Google rep. “I chatted with customer support, and at my request they ADDED the settings, after which I was able to toggle off all Gemini for Workspace settings (gmail, etc.),” the user wrote.

You had to go ask Google for the ability to turn it off.

Thanks to the screenshots OP attached, many of us were able to follow suit, some waiting a few hours in the customer support queue, some waiting several days for Gemini to finally go away. I waited 10 minutes. Here are screenshots from my own chat with Google Workspace Support.

According to what I just experienced, and what many other paid users are experiencing, Google has made opting in to generative A.I. the default. You have to go the extra mile and wait, sometimes hours, in the support queue to even have the option to opt out. Like Meta’s search bar doubling as an A.I. search, or the Transportation Security Administration rolling out default biometric screening at airports, this is a form of manipulative design strategy that all but forces you to engage with and train A.I. (It is also an excellent example of enshittification.)

While the ethics of consent are the most immediate concern here, it’s also critical to acknowledge generative A.I.’s well-documented environmental impact, as well as its growing role in modern warfare, such as Meta opening its A.I. model to the U.S. military, which further harms the environment. The push to normalize the technology’s usage, often without user input, raises urgent questions about accountability and sustainability.

Google Support chat assured me and others in the subreddit that the option to opt out of Gemini for Google Workspace would be rolled out for everyone … eventually. But the issue at hand is that Gemini for Google Workspace was rolled out as a finished product without the ability to opt out or manage its settings. The issue isn’t just about Gemini’s intrusiveness; it’s about the erosion of user autonomy. By making A.I. opt-out rather than opt-in, Google is setting a dangerous precedent: the presumption of consent by default.

Utpal Dholakia describes this default in an article for Psychology Today as a technique used by anyone “interested in influencing others’ behavior,” and one that reflects an asymmetrical power dynamic. “The manipulator is powerful, and the manipulated is weak,” he wrote. Governments and businesses use behavioral nudges to influence citizens and employees, not the other way around. “If I tried to nudge the U.S. government to lower my tax bill, an outcome that I might, no doubt, consider to be virtuous and acceptable, I would very quickly find myself in prison.”

Default opt-ins create a massive power imbalance—one that could “produce tremendous and irreversible harm to a great many people,” he adds.

In a recent interview, Sam Altman, CEO of OpenAI, one of the largest and most influential A.I. companies in the U.S., stated that “the entire structure of society will be up for debate and reconfiguration” as A.I. continues to evolve. If this is the case, then this social contract for how we use—or are made to use—A.I. is actively being negotiated in instances like Google pushing Gemini on our workspaces.

It is unethical that I had to reach out to Google Support to ask for the option to turn off Gemini for my workspace, because all users should have the option to opt in or out, if they so choose. Using our right to say no where we can, as annoying or futile as it may seem, is crucial, as it shapes the future of an already skewed power dynamic where saying no might not be possible anymore.

Some might argue that this approach is necessary to ensure widespread adoption and improve the tool through user feedback. Because A.I. models rely on data to evolve, making Gemini a default feature guarantees engagement. While this reasoning may seem valid, it ignores the ethics of consent. Presuming consent by default undermines trust and sets a dangerous precedent for how tech companies interact with users.

While data on A.I. usage suggests massive popular interest, it’s hard to take those numbers at face value in cases where users are automatically opted in to the experience. Just because we all woke up with U2’s Songs of Innocence on our iPhones doesn’t make it the most popular album of all time. This unreliable and inflated data then fuels a vicious cycle: Big tech companies double down on A.I., deploy products like Gemini for Workspace that users can’t opt out of, and then expect immediate returns for their shareholders—while waiting for users to embrace it enthusiastically.

Gemini for Workspace is now disabled for everyone at Slate until Google offers users the option to opt in themselves. As A.I. becomes more commonplace in our digital lives, especially when there is so little legislation to prevent its misuse, we must demand accountability and the right to say no. Opting out should never be a premium feature—it’s a basic right.

Note: No A.I. assistance was used in the writing of this article.