Google is gearing up to unveil its latest AI language model, Gemini 2.0, in December, according to insider sources from The Verge.

The Verge’s sources suggest that Gemini 2.0 may not deliver the significant performance improvements that the team, led by Deepmind founder Demis Hassabis, had anticipated. Still, the model could introduce some interesting new features, according to The Verge.

The AI leak account “Jimmy Apples,” known for a few accurate predictions in the past, reports that some business customers already have access to the new model.

Other tech companies are pushing forward with their own AI projects. Elon Musk’s xAI is training Grok 3 using 100,000 Nvidia H100 chips at its Memphis supercomputer facility, while Meta is training Llama 4 with even more compute.

Ad

AI companies seek new approaches as progress slows

Google’s potentially underwhelming progress with its flagship LLM may explain the company’s recent acqui-hire of Character.ai for up to $2.5 billion, primarily to bring back renowned AI researcher Noam Shazeer and his team.

Shazeer, who co-developed the Transformer architecture in 2017, is reportedly working on a separate reasoning model at Google to compete with OpenAI’s o1. Meta is also developing similar models. One of the driving researchers behind the o1 architecture, Noam Brown, left Meta for OpenAI. At Meta, he achieved reasoning breakthroughs such as CICERO.

These new models aim to use more computing power during the inference phase—when the AI processes information—rather than focusing mostly on pre-training with large datasets. Researchers hope this approach will lead to better results and a new scaling horizon.

Signs of plateau in LLM capabilities

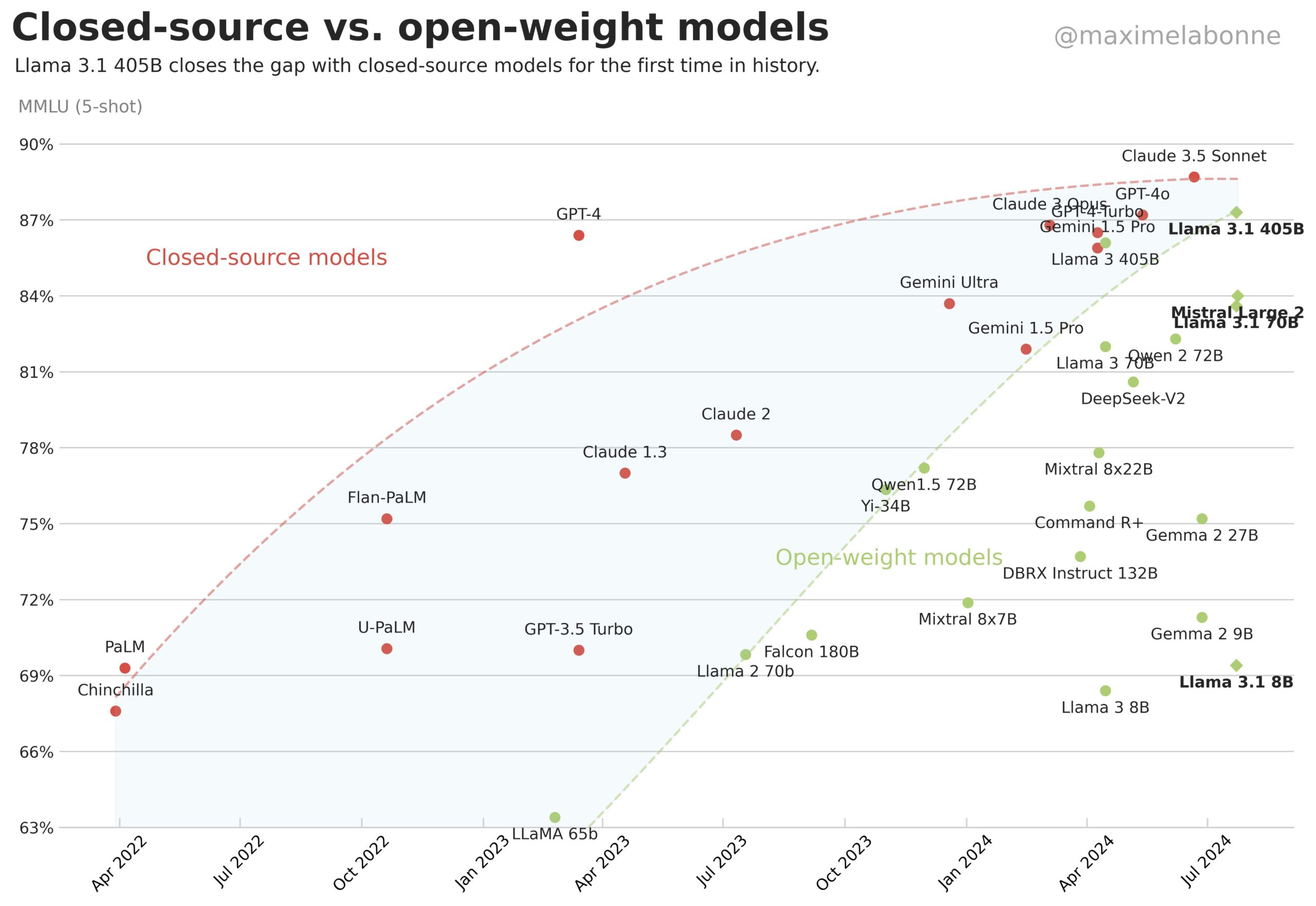

The past two years have shown AI language models becoming more efficient but not necessarily more capable, suggesting possible limits to current approaches. Bill Gates has noted that the improvement from GPT-4 to GPT-5 will likely be smaller than previous upgrades.

Moreover, the capabilities of different models appear to be converging, making language models potentially undifferentiated commodity products with limited competitive advantage despite high development and operating costs. This is a significant threat to highly valued AI model companies such as OpenAI and Anthropic.

Recommendation

Another indication of the plateau thesis: OpenAI has just confirmed that a new model, internally considered as a potential successor to GPT-4, will not be released this year, despite looming competition from Google Gemini 2.0.

Similarly, Anthropic is rumored to have put a previously announced version 3.5 of its flagship Opus model on hold due to a lack of significant progress, instead focusing on an improved version of Sonnet 3.5 that emphasizes agent-based AI.